This is an archived copy. View the original at https://www.f5.com/company/blog/whether-to-allow-and-adopt-ai-with-lessons-from-the-public-cloud

Whether to Allow and Adopt AI with Lessons from the Public Cloud

April 6, 2023

Sam Bisbee

The ability to operationalize data better than competitors has proven to

be a winning advantage for businesses, so it follows that what feels like

incessant discussions of ChatGPT and generative AI are rapidly building

into a wave of “fire-aim-ready” product releases and early startups being

funded. These capabilities are advancements of many orders of magnitude if

they work and scale safely, accurately, objectively, and so on. This

feels like the early days of public cloud, both in terms of hype and

technical advantage, but this time it will be harder because the leap is

so much greater.

Amazon Web Services (AWS) launched SQS in 2004 followed by S3 and EC2 in

2006. If we accept these as the start of the public cloud timeline, or

when it became widely usable, then that means businesses that have not

adopted the public cloud by now have accepted two decades of opportunity

cost. Public cloud was probably too risky for regulated enterprises in

2006, but what about in 2016 or 2026? We’ve seen financial institutions,

governments, and other traditionally risk-averse organizations adopt the

public cloud for some-to-all of their applications and data. The benefits,

such as for those learning to operationalize their data and how to scale

the underlying technology simultaneously, have been so great that anyone

who hasn’t begun their adoption may be too late to close the advantage

they’ve ceded.

Unlike public cloud, businesses will not have decades to evaluate and

adopt capabilities like AI if they wish to remain competitive, a concern

for businesses since the AI timeline does not start with ChatGPT. The

space is moving too fast, and the capabilities appear to be many orders of

magnitude more advanced than what’s likely to be available from

alternative technologies in the short term. Conversational AI with

familiar user interfaces like ChatGPT has allowed for more rapid adoption

by a more diverse group of people without requiring specialist knowledge

like the public cloud did and still does. New adopters believe it is

innovative while those steeped in the space point to other advancements,

wondering why so many people are suddenly paying attention.

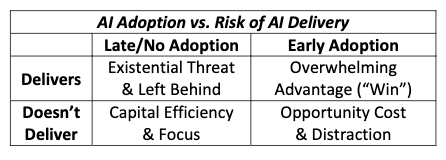

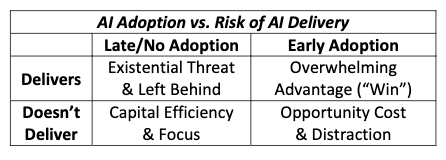

Those who adopt before their competitors will have an overwhelming

advantage if the technology delivers and they will be granted leniency

when (not if) they misstep early in their adoption curve. “The space is

early in its evolution and we’re all learning, but here at <ACME>

we remain leaders in this exciting and competitive…” Generative AI will

probably write the press release and markets will quickly forget the

smoking crater while rewarding the next iteration, much as they have after

the many consumer safety and data breach headlines we’ve become accustomed to.

Like public cloud, adoption will occur regardless of concerns from legal,

IT, security, and compliance. For the first movers, their adoption will be

direct, embedding AI in their processes and products. This will drive

indirect adoption for the “wait and see” businesses as they will use

software or SaaS that has AI embedded in it whether they know it or not,

meaning their data will enter these systems if they haven’t already. Some

of these will be obvious, like technology companies quick to accept risk

and assess consequences after deployment, but the more difficult risk for

security programs to manage will be applications of AI below the waterline

like those we’ve already seen in finance and insurance. We continue to see

evidence in breach analysis that third-party risk management is unable to

adequately inventory downstream data use and sub-service providers below

the waterline, especially for less regulated data classifications that do

not fall into scope for the likes of GDPR and HIPAA, so

security programs will likely not know about or understand the usage of AI

in their vendor supply chain either.

Given the attention ChatGPT has generated with its usability and

performance, the probability that an employee has sent some proprietary or

confidential information from your business into ChatGPT is approaching

100%. Congratulations, your business has adopted generative AI even if you

asked employees not to. This feeling should be familiar because it’s

likely how you felt when you found someone in engineering swiped their

credit card to deploy their team’s new application on the public cloud

only to be rewarded by the business for their faster time to market,

innovative approach, and embracing bleeding-edge technology. In most

environments today, asking a technology team to not use public cloud is a

lot like asking an employee to not use Google’s search in their day-to-day

job.

This is where security must get ahead of adoption and guide it along a

risk-appropriate path, using their subject matter expertise to help their

peers develop a risk-appropriate investment thesis, maturity model, and

roadmap before third parties and regulators try to prescribe their own

approach. Before jumping into the depths of NIST’s

Artificial Intelligence Risk Management Framework (AI RMF 1.0) and

performing a gap analysis against your current practices, these are some

example starter conversations security teams should be exploring

internally and with their peers:

- How do we determine how much of the technology’s promised value is
  delivered, aspirational, or improbable? What properties would we need to
  see before we invest in an experiment versus product development or some
  greater investment level?

- What is the likelihood our competitors adopt before we do? If they’re
  successful, is follow-on adoption the only way for us to close the gap
  or will they have gained an insurmountable advantage?

- Is this decision a one-way or two-way door? Are there known risks
  associated with adoption? What is the material impact if we do adopt
  and find novel risks?

- To what degree can we own this technology internally and keep positive
  control over our data and systems? Do we have, or can we reasonably
  obtain, the necessary talent? Is it safer to rely on a third-party
  service provider for this technology?

None of these questions mention ChatGPT or AI. They are generally

reasonable conversation starters for any risk-based security program to

evaluate early technologies ahead of adoption whether it be bring your own

device (BYOD), public cloud, containers, machine learning, or eventually

AI. This was not the approach that many teams took with the public cloud,

and they were caught flat-footed and unaware when they realized how much

it was used directly and indirectly in their organizations. Threat actors

were not slow to adapt as they learned and exploited the properties of

public cloud faster than most consumers—all technology is dual use.

The direction and adoption of technology are hard-to-impossible to predict,

but that it will continue accelerating is predictable. If the current or

a near-future

iteration of AI hits a wall and stops advancing, or AI proves

unviable at larger technical scales or for cost reasons, it will have

already achieved a usable and investable state for businesses. If AI does

not hit a wall, then it will likely become an expected investment and

means of interacting with data, products, and businesses as we have

already seen happen in sales, marketing, healthcare, and finance. So, it

is a current reality, not a future one, that security teams must work out

how to evolve their data security and third-party risk management

practices to contend with this technology. They must catch up with and

pull ahead of the already growing adoption so that they don’t have to

relive the experience of public cloud racing ahead of cloud security.
